Migrating Glovo’s dispatching service from a single machine to a distributed system

At Glovo, we have one vision: Connecting our customers to their cities. We do that by facilitating access to different services (like restaurants, supermarkets, and other stores) through a single mobile application and a fleet of independent couriers.

Anything you want. Delivered in minutes.

Of course, that is easier said than done. Behind the scenes, there are hundreds of factors that affect the experience of our customers and couriers.

One of the key factors in this equation is deciding which courier is the best fit to deliver each order. This is very important for us because finding the best match means our customers receive their orders faster, our couriers deliver more orders every hour (and earn more money), and our partners receive more orders.

Answering that question is the main job of our dispatching team. And more specifically: the job of Jarvis.

Meet Jarvis, our Dispatching Service

Jarvis is Glovo’s dispatching service. Its goal is to make cities as efficient as possible. I’d say Jarvis stands out from other services in three different ways:

- Its domain is very mathematical. We are running an optimisation problem based on estimations we get from machine learning models (e.g. how much time will it take the courier to reach a point? When will the partner have the order ready?).

- Its behavior is different in every city. Each city has its own geography, its own demographic distribution, its own traffic… and, most importantly, its own personality. We need to account for those differences; adjusting thresholds, activating different heuristics, and so on.

- During peak hours, our highest-volume cities have tens of thousands of couriers in different areas and states. At the same time, hundreds of new orders come in every minute. We need to evaluate every possible combination of orders and couriers and choose the best match in real time, so high performance is critical.
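To make the matching problem concrete, here is a minimal sketch of it as a cost-minimisation over orders and couriers. It is an illustration only, not Jarvis's algorithm: the function name `best_assignment`, the cost matrix, and the brute-force search are all assumptions made for the example, and the costs stand in for the estimates a machine learning model would provide.

```python
from itertools import permutations


def best_assignment(cost):
    """Return (total_cost, {order_index: courier_index}) with the lowest total cost.

    cost[o][c] is the estimated delivery time (in minutes) if courier c takes order o.
    Hypothetical helper: brute force, assumes at least as many couriers as orders.
    """
    n_orders = len(cost)
    n_couriers = len(cost[0])
    best_total, best_match = float("inf"), None
    # Try every way of giving each order a distinct courier.
    for couriers in permutations(range(n_couriers), n_orders):
        total = sum(cost[order][courier] for order, courier in enumerate(couriers))
        if total < best_total:
            best_total, best_match = total, couriers
    return best_total, dict(enumerate(best_match))


# Two orders, three couriers (made-up estimates).
estimates = [
    [4, 9, 7],  # order 0
    [6, 3, 8],  # order 1
]
total, match = best_assignment(estimates)
print(total, match)
```

Trying every combination grows factorially with the number of couriers, which is why performance becomes critical at city scale. A real system would need a far more efficient optimisation method than this enumeration.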

From pets to cattle

A few months ago, we had a big problem: Jarvis was one of the most critical pieces in our system. However, it was also one of the most fragile.

This is how everything worked back then:

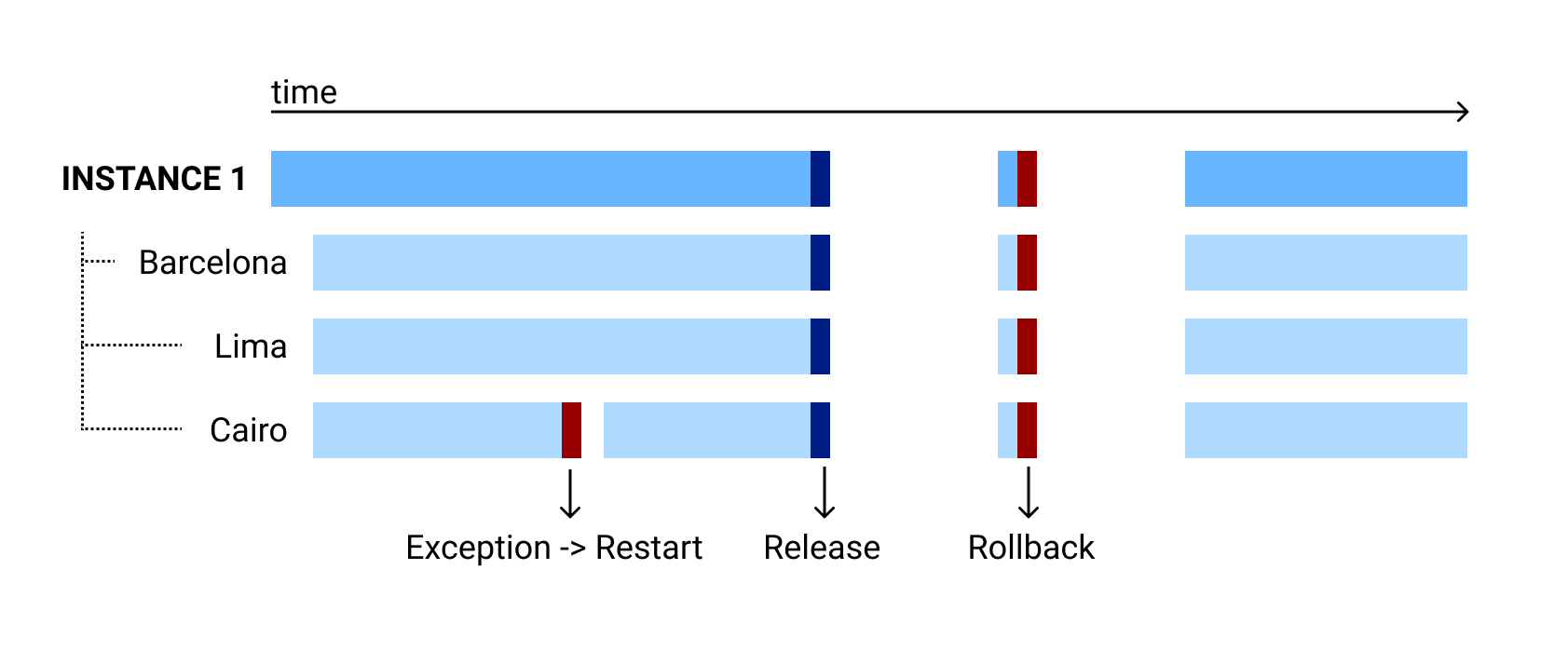

We used the actor model to run hundreds of concurrent actors. Each actor would control a particular city. Our main problem was that two actors could not be in charge of the same city, as they could potentially make conflicting decisions. This meant we could not distribute the service: we needed to rely on a single physical machine.
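The one-actor-per-city constraint can be sketched as follows. This is a simplified illustration using Python threads and queues, not the actor framework Jarvis used; `CityActor` and `ActorSystem` are hypothetical names. Note that the registry preventing duplicate actors is a plain in-process dictionary, which is exactly what ties the design to a single machine.

```python
import queue
import threading


class CityActor(threading.Thread):
    """Owns the state of one city and handles its messages one at a time."""

    def __init__(self, city):
        super().__init__(daemon=True)
        self.city = city
        self.mailbox = queue.Queue()
        self.decisions = []

    def run(self):
        while True:
            order_id = self.mailbox.get()
            if order_id is None:  # sentinel: stop the actor
                break
            # Placeholder for the real assignment logic.
            self.decisions.append(f"{self.city}: assign order {order_id}")


class ActorSystem:
    """In-process registry that guarantees at most one actor per city."""

    def __init__(self):
        self._actors = {}

    def spawn(self, city):
        if city in self._actors:
            raise ValueError(f"an actor already controls {city}")
        actor = CityActor(city)
        self._actors[city] = actor
        actor.start()
        return actor

    def shutdown(self):
        for actor in self._actors.values():
            actor.mailbox.put(None)
        for actor in self._actors.values():
            actor.join()
```

A second process on another machine would have its own empty registry and could happily spawn a second actor for the same city, so the guarantee does not survive distribution.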

As a result, every time we deployed a new version we would have a few minutes of downtime, increasing our delivery time (one of our most important KPIs). If the version was faulty and we needed to roll back, we would have twice as much downtime.

Last quarter, we decided to change this and transition from a we-have-a-single-machine-please-cross-your-fingers architecture to a highly available, distributed architecture.

The task was both daunting and very interesting. We had to make some fundamental changes to our system, but it was absolutely essential to make them gradually, without disrupting the service at all. To put it another way: we needed to change the plane’s engine in the middle of the flight.

Whiteboard session No.1: Requirements

The first thing we did was gather the whole team and come up with a list of requirements. Roughly speaking:

- The service should be distributed. At any time, there should be multiple machines in different availability zones or regions, competing to run the assignment problem for a city.

- It is vital that we ensure the service’s liveness: if a machine dies while making decisions for a particular city, it should not prevent other machines from picking up that city in the future.

- The decisions we make must not cause conflicts (e.g. we don’t want to assign two orders to a courier at the same time). Therefore, two tasks for the same city must not run concurrently or, if they do, at most one of them should publish its decisions.

- We should strive for simplicity. Jarvis is a critical piece of our system. The more technical complexity we add, and the more services we depend upon, the higher the chance of service unavailability.
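As a thought experiment, here is one possible reading of the liveness and no-conflict requirements: a lease that expires on its own, plus a token that lets the store reject decisions from a stale holder. This is not the approach the team took, which this post does not describe. `LeaseStore` is a hypothetical in-memory stand-in for whatever shared store a real deployment would use, and all method names are assumptions.

```python
import time


class LeaseStore:
    """In-memory stand-in for a shared store (hypothetical, single process only)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._expires_at = {}  # city -> time at which the current lease expires
        self._latest_token = {}  # city -> most recent token handed out
        self.published = []  # (city, token, decisions) accepted so far

    def acquire(self, city, ttl):
        """Return a new token if the city is free or its lease expired, else None."""
        now = self._clock()
        if self._expires_at.get(city, 0.0) > now:
            return None  # another machine still holds a live lease
        token = self._latest_token.get(city, 0) + 1
        self._latest_token[city] = token
        self._expires_at[city] = now + ttl
        return token

    def publish(self, city, token, decisions):
        """Accept decisions only from the holder of the newest token for the city."""
        if token != self._latest_token.get(city):
            return False  # stale holder: its lease was taken over
        self.published.append((city, token, decisions))
        return True
```

With this shape, a machine that dies simply stops renewing and its lease lapses after `ttl`, so the city can be picked up again. A machine that was merely slow and wakes up after losing its lease has its publish rejected, so two tasks may overlap but only one publishes.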

Whiteboard session No.2: (And beyond…)

We don’t want to make this post overly long and, to tell the truth, we would be really glad to hear from you and how you would approach this problem! So let’s leave it here for now.

In the next article, we’ll follow up with our approach, with a couple of surprising plot twists that made us redefine our solution.